Intro

Background

For the past few years, I’ve been running Nextcloud with its official S3 backend, which opened the door to the fascinating world of object storage. Backups and migrations have become a breeze, and the S3-style API brings a truly cloud-native feel to my homelab. But the cost of remote cloud storage adds up fast, and relying on it feels a bit at odds with the spirit of self-hosting.

Originally, I ran MinIO inside a Docker VM to get an S3-compatible store in my lab. That worked well at first, but over time the VM backups grew cumbersome, restore times dragged, and “noisy neighbor” workloads on the Proxmox host became a constant headache. Security concerns only added to my unease.

Why Change?

I needed a lean, resilient system that could:

- Sustain at least 1 Gbps throughput (2.5 Gbps preferred)

- Provide redundant storage (because disks do fail)

- Sip power and stay silent

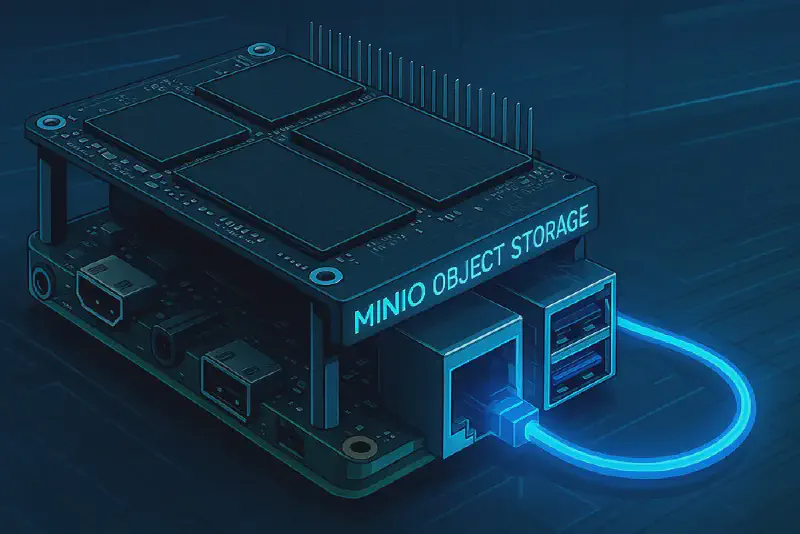

A new mini-PC with NVMe adapters felt like overkill for a handful of services—until I discovered the Raspberry Pi 5’s hidden strength: PCIe support. With a simple NVMe shield (e.g. Geekworm X1004), you can attach two drives and build a fully self-contained object store that ticks all the boxes.

The Raspberry Pi 5

- Bare-metal performance without VM overhead

- Low power draw and near-silent operation

- PCIe 2.0×1 slot with a splitter shield for dual NVMe SSDs

- All the benefits of a well-maintained Linux SBC

Of course, two NVMes alone don’t give you redundancy; that’s where ZFS comes in.
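As a rough sanity check of the throughput goal, the ceiling of the PCIe 2.0×1 link can be estimated from the standard figures for that generation: 5 GT/s per lane with 8b/10b encoding. This is only a back-of-the-envelope sketch. It ignores protocol overhead, and the lane is presumably shared by both drives behind the splitter.

```python
def pcie_lane_gbps(gigatransfers_per_s, payload_bits, encoded_bits):
    """Usable line rate of one PCIe lane in Gbit/s after line encoding."""
    return gigatransfers_per_s * payload_bits / encoded_bits


# PCIe 2.0: 5 GT/s per lane, 8b/10b encoding (8 payload bits per 10 line bits)
link_gbps = pcie_lane_gbps(5.0, 8, 10)
link_mb_per_s = link_gbps * 1000 / 8  # decimal megabytes per second

TARGET_GBPS = 2.5  # the "preferred" network throughput from the requirements

print(f"PCIe 2.0 x1 ceiling: {link_gbps} Gbit/s ({link_mb_per_s} MB/s)")
print(f"Headroom over {TARGET_GBPS} Gbit/s target: {link_gbps - TARGET_GBPS} Gbit/s")
```

Even with overhead, the single lane should not be the bottleneck for a gigabit-class network target.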

Architecture

At the core of this stack sits a Raspberry Pi 5 (8 GB) running entirely bare-metal. Around it, we’ve assembled a fully automated, S3-compatible storage service with local redundancy and cloud-native workflows:

1. Storage Layer: ZFS on NVMe

- Two 1 TB NVMe SSDs mounted via PCIe 2.0×1 are mirrored under ZFS.

- The `trunk/minio` ZFS dataset serves as MinIO’s data directory, leveraging:

  - Checksummed self-healing

  - Snapshots for point-in-time recovery

  - Compression & deduplication
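The storage layer above boils down to two commands: one to create the mirrored pool and one to create the dataset. The sketch below only builds and prints them, it does not run anything. The device paths and the `lz4` compression choice are assumptions for illustration; the pool name `trunk` is inferred from the dataset name.

```python
import shlex


def zfs_commands(pool="trunk", dataset="minio",
                 devices=("/dev/nvme0n1", "/dev/nvme1n1")):
    """Build the argv lists for a mirrored pool and a MinIO dataset.

    Device paths are hypothetical; check `lsblk` on the actual system.
    """
    if len(devices) < 2:
        raise ValueError("a mirror needs at least two devices")
    return [
        # Mirror vdev: every block is written to both NVMe drives
        ["zpool", "create", pool, "mirror", *devices],
        # Dataset with compression and deduplication enabled
        ["zfs", "create", "-o", "compression=lz4", "-o", "dedup=on",
         f"{pool}/{dataset}"],
    ]


for argv in zfs_commands():
    print(shlex.join(argv))
```

Keeping the commands as data makes them easy to review before handing them to a shell or a provisioning tool.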

2. Object Store: MinIO + Terraform

- MinIO runs as a Single-Node Single-Drive (SNSD) S3-compatible server.

- Buckets, users, policies, and access keys are defined in code with the aminueza/minio Terraform provider, making every change versioned, auditable, and repeatable.
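To give a feel for what "policies defined in code" means, here is a minimal sketch of the kind of S3-style policy document that would be attached to a MinIO user. It is plain Python rather than Terraform, and the bucket name `nextcloud` is a hypothetical example, not taken from the actual setup.

```python
import json


def bucket_policy(bucket):
    """Return a read/write policy limited to a single bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                # Object operations apply to keys inside the bucket
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }


# "nextcloud" is a hypothetical bucket name
print(json.dumps(bucket_policy("nextcloud"), indent=2))
```

Because the policy is generated from a single function, granting another service its own bucket is a one-line change that shows up in version control.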

3. Networking & Security: UFW + NGINX

- UFW firewall allows only SSH (22), HTTP (80) and HTTPS (443).

- NGINX listens on 80/443, terminates TLS, and reverse-proxies authenticated requests to MinIO on `localhost`.
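One way to verify these rules is to probe the Pi from another machine on the network. The sketch below assumes MinIO listens on its default API port 9000 (the article does not state the port) and expects that port to be unreachable from outside, while 22, 80 and 443 answer. The hostname in the usage note is a placeholder.

```python
import socket

# Port -> should it be reachable from another host?
# 9000 is MinIO's default API port (assumption: the default is unchanged)
EXPECTED = {22: True, 80: True, 443: True, 9000: False}


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, ...
        return False


def audit(host, expected=EXPECTED, probe=is_port_open):
    """Map each port to True if its reachability matches the expectation."""
    return {port: probe(host, port) == want for port, want in expected.items()}
```

Calling `audit("pi5.example.lan")` from a laptop returns a dictionary in which every value should be `True` if the firewall behaves as described.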

4. Backup & Monitoring: Borg + Cron + Uptime Kuma

A simple cron job ties everything together:

- Create a ZFS snapshot of `trunk/minio`.

- Push incremental archives with Borg over SSH to a Hetzner Storage Box.

- Fire a success/failure webhook to Uptime Kuma for real-time alerts.
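The three steps above can be sketched as a small script for cron to call. This is an illustration under stated assumptions, not the actual job: the repository URL, the push URL and its token are hypothetical placeholders, the dataset is assumed to be mounted at its default path `/trunk/minio`, and the `status`/`msg` query parameters follow the format of Uptime Kuma's push monitors.

```python
import subprocess
import urllib.parse
import urllib.request
from datetime import datetime, timezone

DATASET = "trunk/minio"                              # dataset from the article
MOUNTPOINT = "/trunk/minio"                          # assumption: default mountpoint
BORG_REPO = "ssh://user@storagebox.example/./minio"  # hypothetical repository URL
PUSH_URL = "https://kuma.example/api/push/TOKEN"     # hypothetical push monitor URL


def backup_commands(stamp):
    """Return the argv lists for one backup run, in execution order."""
    name = f"borg-{stamp}"
    return [
        # 1. Freeze a consistent point-in-time view of the data
        ["zfs", "snapshot", f"{DATASET}@{name}"],
        # 2. Archive the read-only snapshot directory; Borg deduplicates,
        #    so only new chunks are transferred
        ["borg", "create", "--stats", f"{BORG_REPO}::minio-{stamp}",
         f"{MOUNTPOINT}/.zfs/snapshot/{name}"],
    ]


def push_url(ok, msg):
    """Build the webhook URL reporting success or failure."""
    query = urllib.parse.urlencode({"status": "up" if ok else "down", "msg": msg})
    return f"{PUSH_URL}?{query}"


def run_backup(run=None, notify=None, now=None):
    """Run snapshot + archive, then report the result. Returns True on success."""
    run = run or (lambda argv: subprocess.run(argv, check=True))
    notify = notify or (lambda url: urllib.request.urlopen(url, timeout=10).close())
    stamp = (now or datetime.now(timezone.utc)).strftime("%Y%m%dT%H%M%SZ")
    try:
        for argv in backup_commands(stamp):
            run(argv)
    except (subprocess.CalledProcessError, OSError) as exc:
        notify(push_url(False, f"backup failed: {exc}"))
        return False
    notify(push_url(True, "OK"))
    return True
```

The `run` and `notify` parameters exist so the flow can be exercised without touching ZFS or the network; a cron entry would simply call `run_backup()` with the defaults.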

5. Configuration Management: Ansible

All system provisioning is codified in Ansible:

- OS updates and package installs (ZFS tools, MinIO, Borg, NGINX, UFW)

- ZFS pool & dataset creation and tuning

- MinIO binary deployment and service definitions

- Firewall rules and NGINX site configuration

- Borg repo initialization and cron scheduling

This design delivers a cloud-native S3 interface with local, ZFS-backed redundancy and end-to-end automation: it reaches gigabit-class throughput, survives a single-disk failure, and keeps power consumption and noise to a minimum on a single Raspberry Pi. More details on the Ansible setup will follow in a second part.